In February 2017, CPython moved from Bitbucket and Mercurial to GitHub and Git: read "[Python-Dev] CPython is now on GitHub" by Brett Cannon.

In 2016, I worked on speed.python.org to automate running benchmarks and to make the results more stable. In the end, I had a single command to:

- tune the system for benchmarks

- compile CPython using LTO+PGO

- install CPython

- install performance

- run performance

- upload results
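The steps listed above could be chained roughly as follows. This is a minimal Python sketch, not the actual tool behind speed.python.org. It assumes today's tool names (`pyperf` for system tuning and the `pyperformance` suite, formerly published as `performance`), a hypothetical install prefix, and that it runs from a CPython source checkout, where the `--with-lto` and `--enable-optimizations` configure options enable LTO and PGO. The upload step is left out because it depends on the server. By default it only prints the commands (dry run).

```python
import shlex
import subprocess
from typing import List

# Hypothetical install prefix, for illustration only.
PREFIX = "/opt/cpython-bench"


def build_pipeline(prefix: str = PREFIX) -> List[List[str]]:
    """Return the benchmark steps as argument lists (a sketch, not the real tool)."""
    python = f"{prefix}/bin/python3"
    return [
        # Tune the system for benchmarks (CPU frequency, isolated CPUs, ...).
        ["python3", "-m", "pyperf", "system", "tune"],
        # Configure CPython with LTO and PGO.
        ["./configure", "--with-lto", "--enable-optimizations", f"--prefix={prefix}"],
        # Compile (the PGO build runs a training workload).
        ["make"],
        # Install CPython into the prefix.
        ["make", "install"],
        # Install the benchmark suite into the freshly built Python.
        [python, "-m", "pip", "install", "pyperformance"],
        # Run the benchmarks against the new Python and write JSON results.
        [python, "-m", "pyperformance", "run", f"--python={python}", "--output=results.json"],
        # Upload step omitted: it depends on the server setup.
    ]


def run_pipeline(commands: List[List[str]], dry_run: bool = True) -> List[str]:
    """Print each command; unless dry_run, execute it and stop at the first failure."""
    printed = []
    for cmd in commands:
        line = " ".join(shlex.quote(arg) for arg in cmd)
        print(line)
        printed.append(line)
        if not dry_run:
            subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
    return printed


if __name__ == "__main__":
    run_pipeline(build_pipeline())
```

Keeping each step as an argument list (rather than one shell string) avoids quoting issues and makes it easy to stop at the first failing step.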

But my tools were written for Mercurial, and speed.python.org uses Mercurial revisions as keys for changes. Since the CPython repository was converted to Git, I have to remove all old results and run the old benchmarks again. Before removing everything, I took screenshots of the most interesting pages. I would prefer to keep a copy of all data, but that would require writing new tools, and I am not motivated to do that.

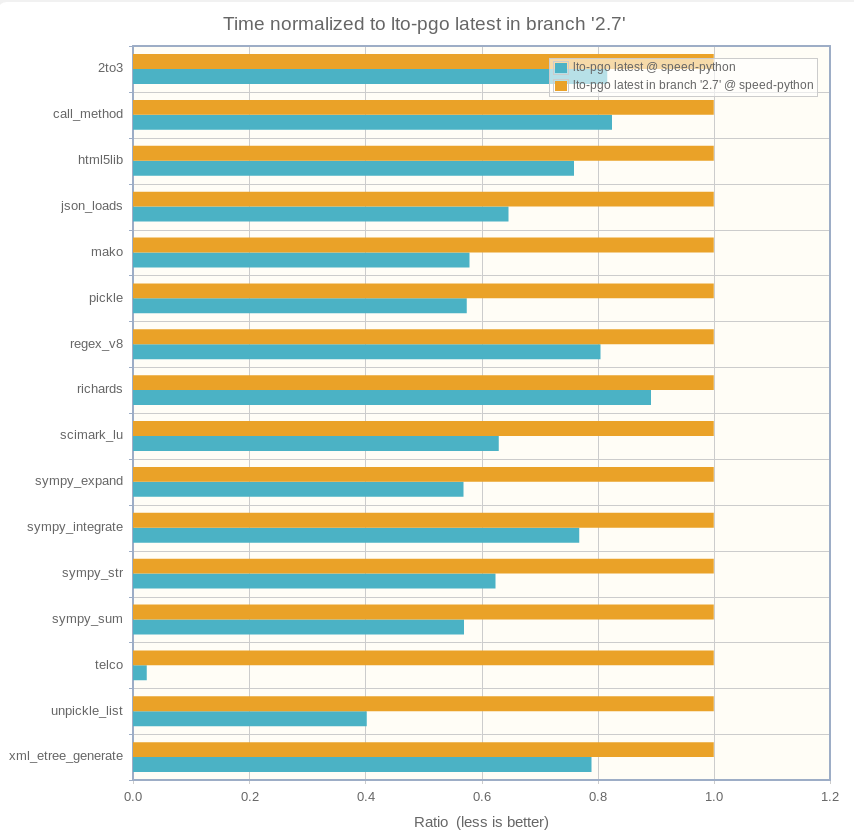

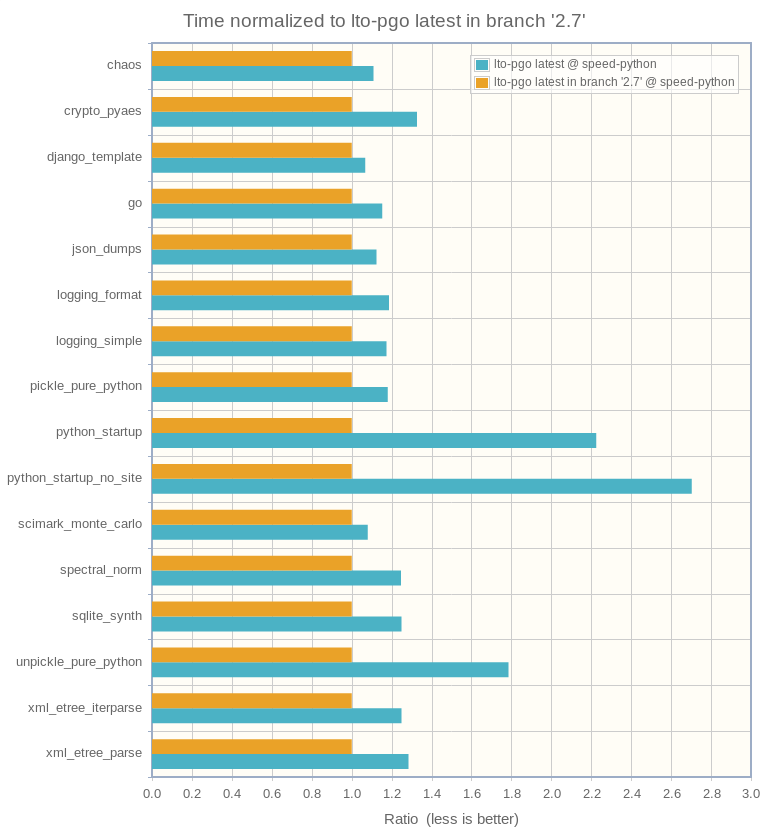

Python 3.7 compared to Python 2.7

Benchmarks where Python 3.7 is faster than Python 2.7:

Benchmarks where Python 3.7 is slower than Python 2.7:

Significant optimizations

CPython became steadily faster during 2016 on the following benchmarks.

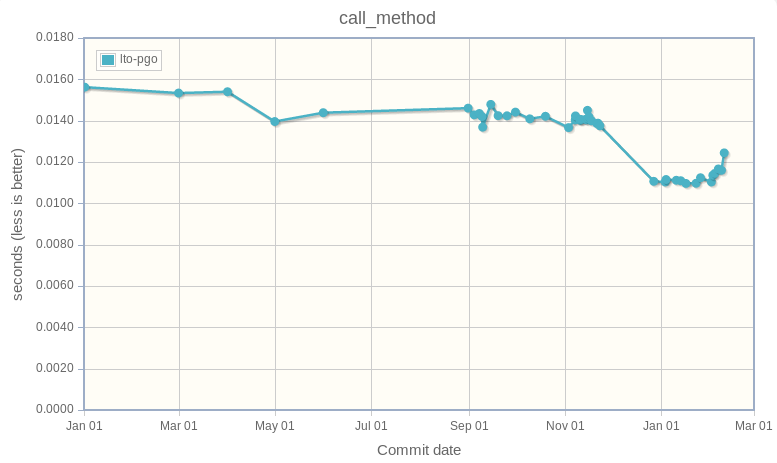

call_method: the main optimization was "Speedup method calls 1.2x":

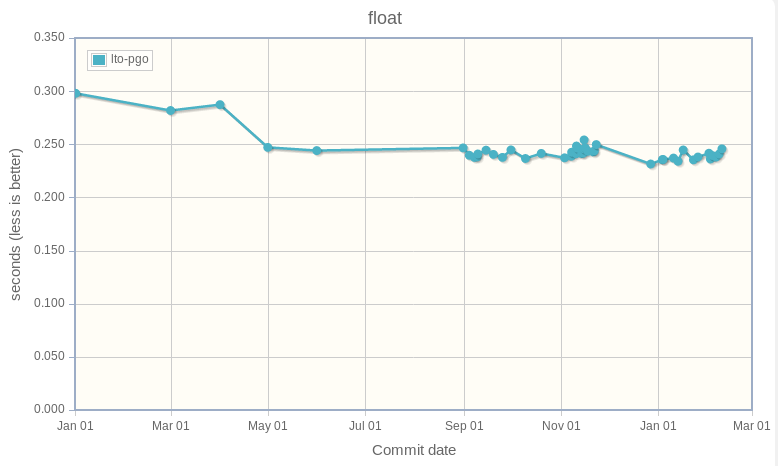

float:

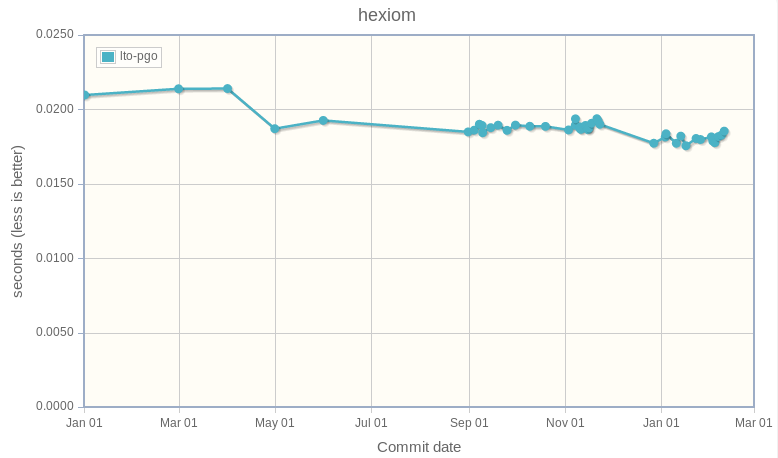

hexiom:

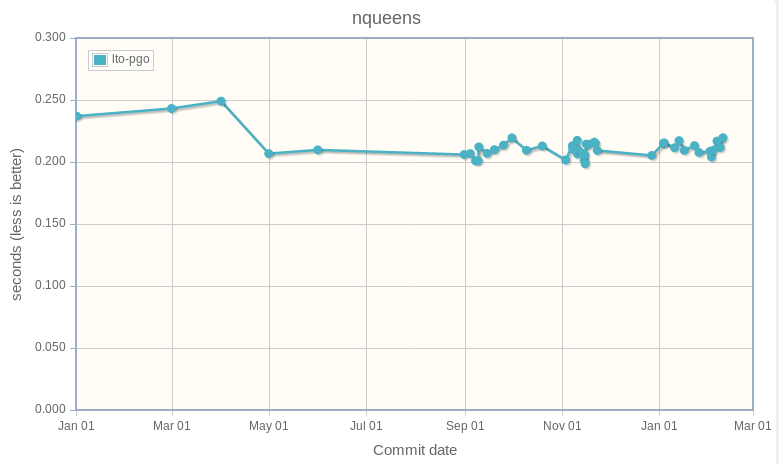

nqueens:

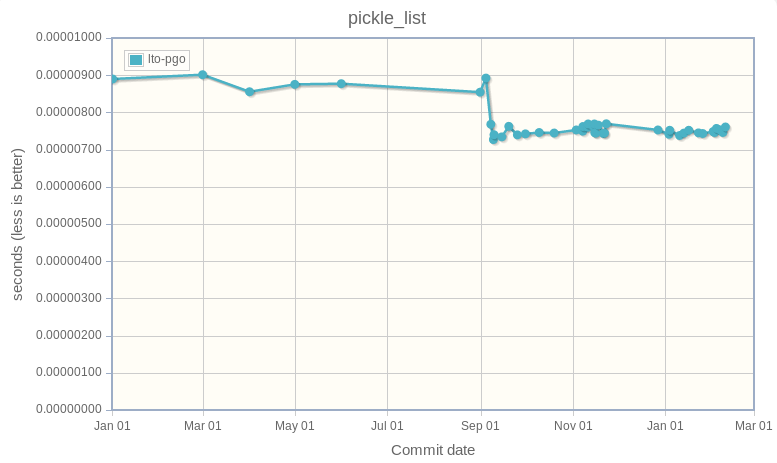

pickle_list: something happened around September 2016:

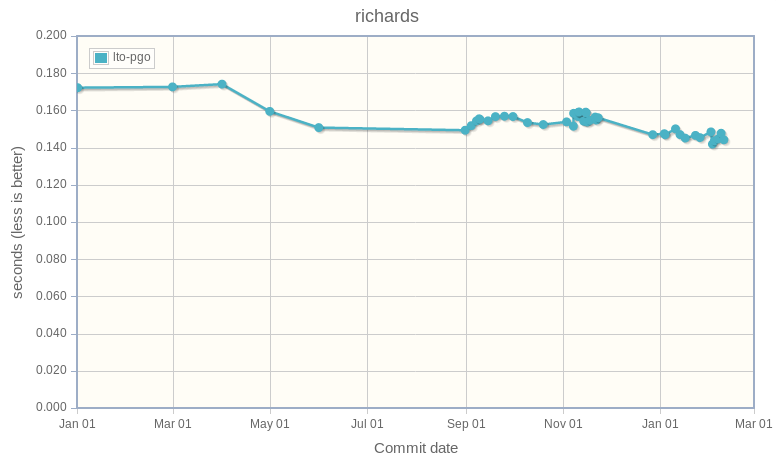

richards:

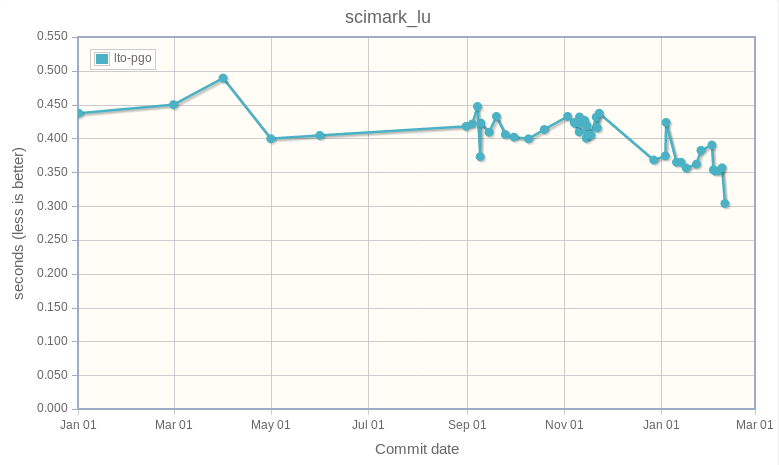

scimark_lu: I like the latest dot!

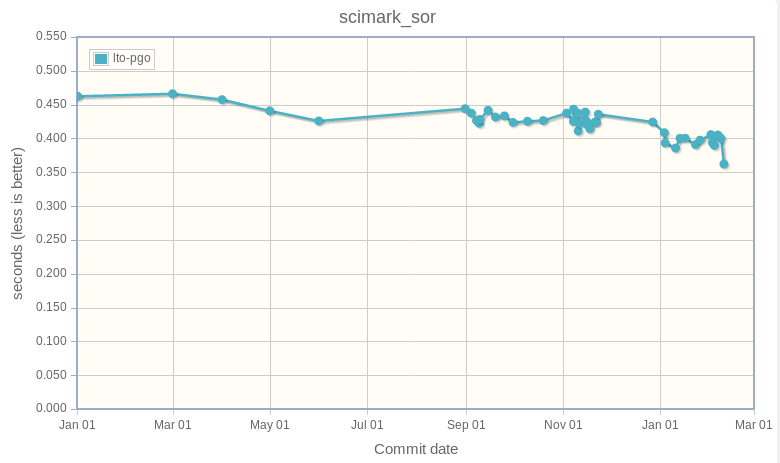

scimark_sor:

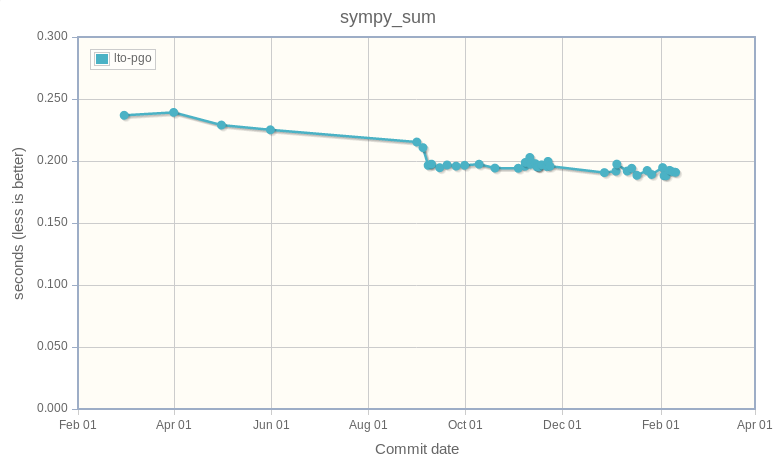

sympy_sum:

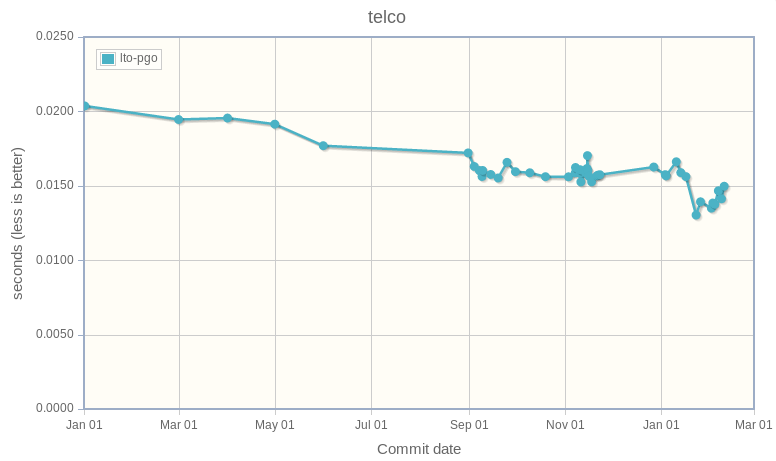

telco shows one of the most impressive improvements; it became steadily faster:

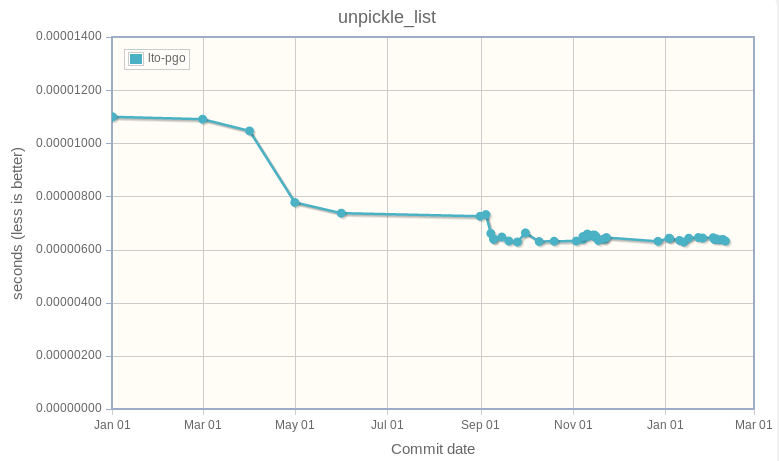

unpickle_list: something happened between March and May 2016:

The enum change

One change related to the enum module had a significant impact on the following two benchmarks.

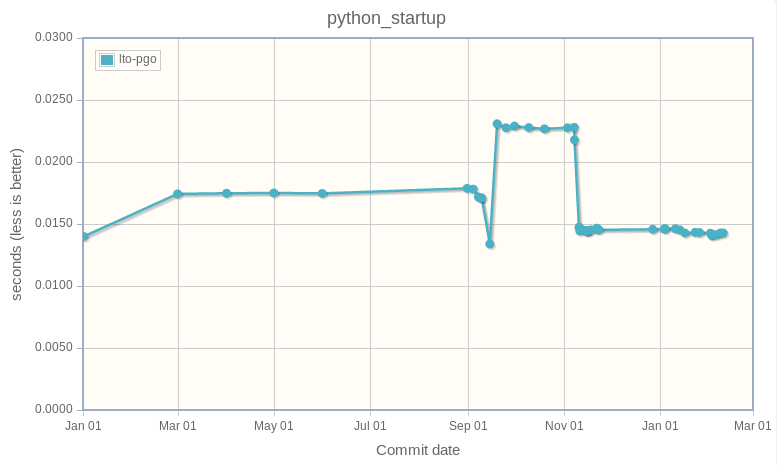

python_startup:

See the "Python startup performance regression" section of "My contributions to CPython during 2016 Q4" for an explanation of the changes around September 2016.
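To get a rough feel for startup time on your own machine, a minimal sketch is to spawn the interpreter repeatedly and time it. This is much cruder than the `python_startup` benchmark (no calibration, no system tuning), and the helper names are hypothetical. It also checks whether `enum` is already loaded at startup, which varies between Python versions. On Python 3.7 and newer, `python -X importtime -c pass` gives a per-module breakdown of import time.

```python
import statistics
import subprocess
import sys
import time
from typing import List


def startup_times(extra_args: List[str], runs: int = 20) -> List[float]:
    """Wall-clock seconds to start the current interpreter and run 'pass'."""
    cmd = [sys.executable, *extra_args, "-c", "pass"]
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        subprocess.run(cmd, check=True)
        timings.append(time.perf_counter() - start)
    return timings


def enum_imported_at_startup() -> bool:
    """Return True if the enum module is already in sys.modules at startup."""
    out = subprocess.run(
        [sys.executable, "-c", "import sys; print('enum' in sys.modules)"],
        check=True, capture_output=True, text=True,
    ).stdout.strip()
    return out == "True"


if __name__ == "__main__":
    for label, args in [("default", []), ("no site (-S)", ["-S"])]:
        t = startup_times(args)
        print(f"{label}: median {statistics.median(t) * 1000:.1f} ms")
    print("enum imported at startup:", enum_imported_at_startup())
```

The median is used rather than the mean so that a single slow spawn does not dominate the result.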

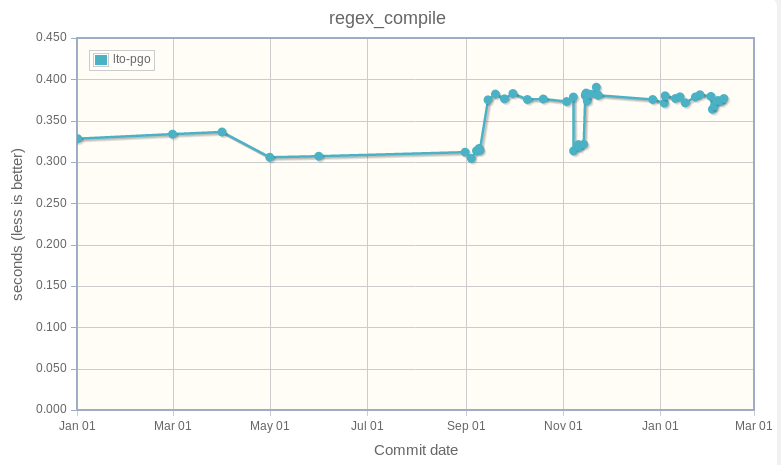

regex_compile became 1.2x slower (312 ms => 376 ms: +20%) because the constants of the re module became enum objects: see "Convert re flags to (much friendlier) IntFlag constants" (issue #28082).
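The numbers check out: 376 / 312 ≈ 1.205, hence "1.2x" and "+20%". The sketch below illustrates what changed for the flags themselves: since Python 3.6 they are members of `re.RegexFlag`, an `enum.IntFlag` subclass, so an operation like `re.I | re.M` goes through enum methods written in Python instead of a single int operation. It only times that per-operation overhead; it does not reproduce the regex_compile benchmark, and it does not measure how much of the slowdown comes from flag arithmetic rather than other enum work.

```python
import enum
import re
import timeit

# Since Python 3.6, re flags are members of re.RegexFlag, an IntFlag subclass.
assert isinstance(re.IGNORECASE, enum.IntFlag)
# They still compare equal to the plain integers they replaced.
assert re.IGNORECASE == 2 and re.MULTILINE == 8


def or_timings(number: int = 200_000):
    """Time 'a | b' on plain ints versus on RegexFlag members."""
    plain = timeit.timeit("a | b", globals={"a": 2, "b": 8}, number=number)
    flags = timeit.timeit(
        "a | b", globals={"a": re.IGNORECASE, "b": re.MULTILINE}, number=number
    )
    return plain, flags


if __name__ == "__main__":
    plain, flags = or_timings()
    print(f"int | int:               {plain:.4f} s")
    print(f"RegexFlag | RegexFlag:   {flags:.4f} s")
```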

Benchmarks became stable

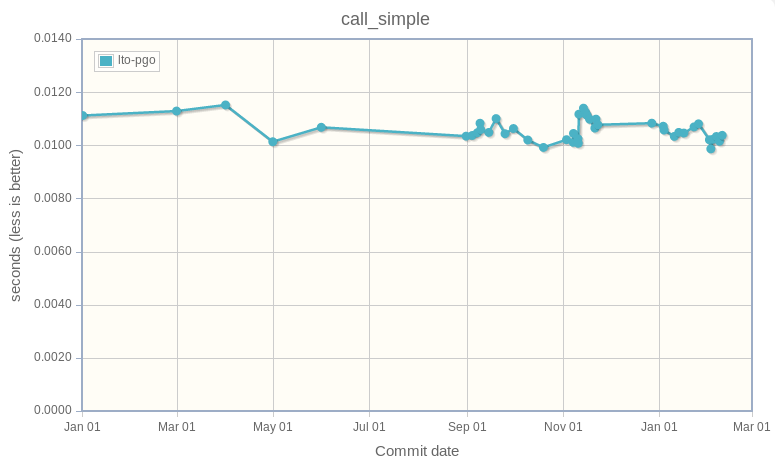

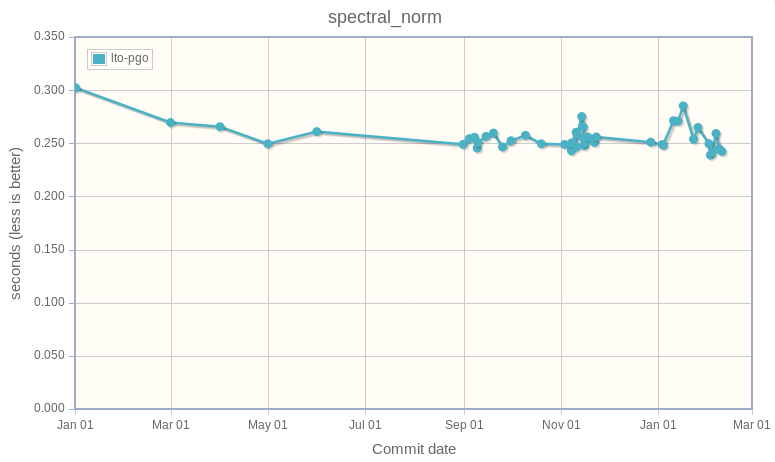

The following benchmarks are microbenchmarks, which are impacted by many external factors, so it's hard to get stable results. I'm happy to see that their results are now stable; I would even say very stable compared to the results when I started working on the project!
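One simple way to put a number on "stable" is the relative standard deviation (standard deviation divided by the mean) of repeated runs; pyperf itself reports results as "mean +- std dev". A minimal sketch, with made-up sample timings:

```python
import statistics
from typing import List


def relative_stdev(samples: List[float]) -> float:
    """Sample standard deviation divided by the mean (coefficient of variation)."""
    return statistics.stdev(samples) / statistics.mean(samples)


# Hypothetical timings (ms) of one benchmark over several runs, for illustration.
runs_ms = [100.0, 102.0, 98.0, 101.0, 99.0]
mean = statistics.mean(runs_ms)
stdev = statistics.stdev(runs_ms)
print(f"Mean +- std dev: {mean:.1f} ms +- {stdev:.1f} ms "
      f"({relative_stdev(runs_ms):.1%})")
# Mean +- std dev: 100.0 ms +- 1.6 ms (1.6%)
```

A relative value makes benchmarks of very different durations comparable: a 1.6 ms spread means little for a 100 ms benchmark but a lot for a 5 ms one.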

call_simple:

spectral_norm:

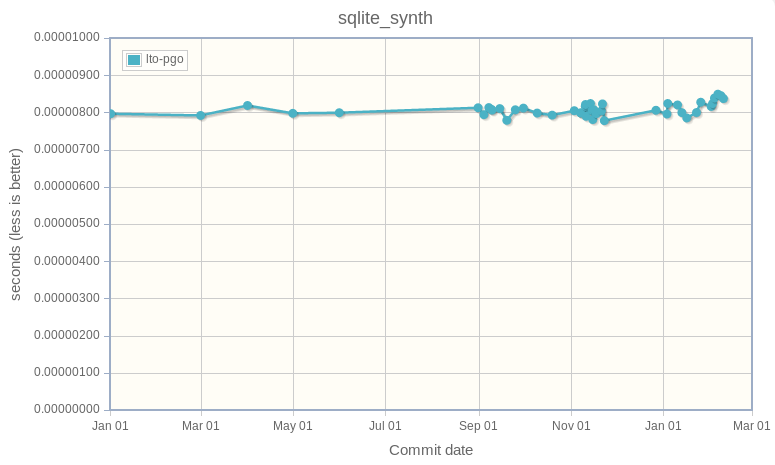

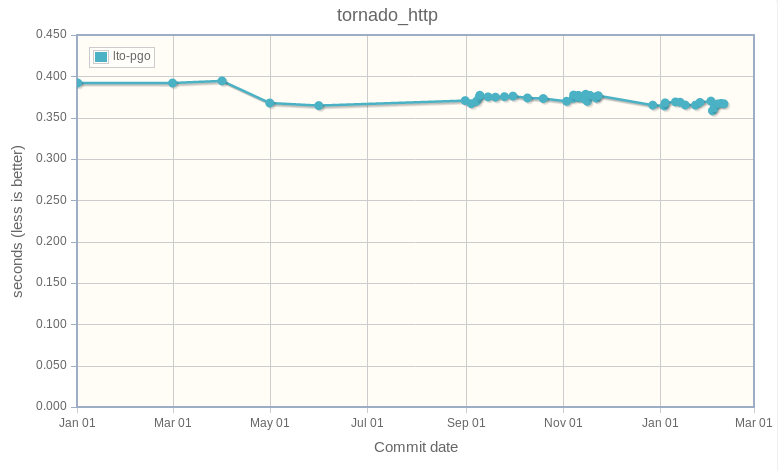

Straight line

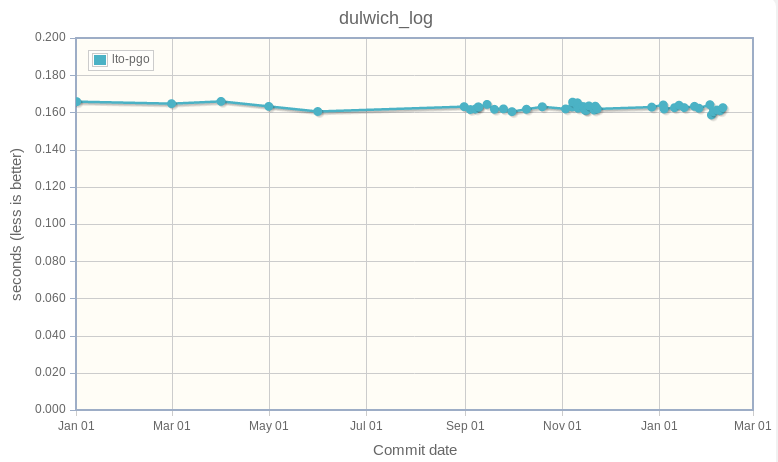

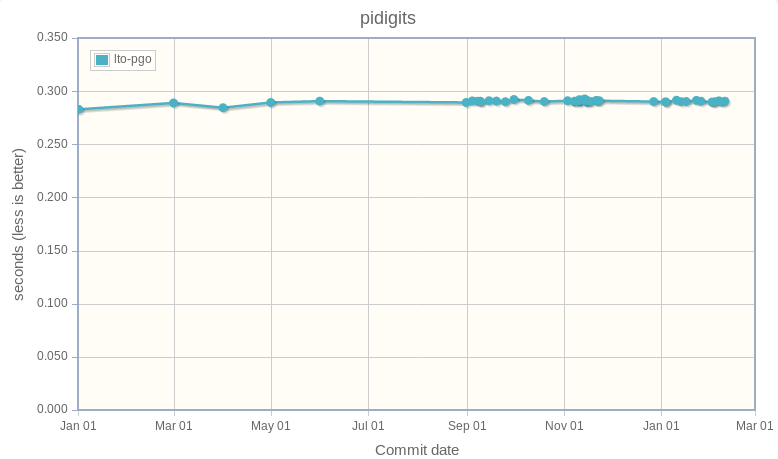

It seems like no optimization had a significant impact on the following benchmarks. You can also see that these benchmarks became stable, which makes it easier to detect a performance regression or a significant optimization.
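Why stability helps: whether a change looks significant depends on the difference between means relative to the noise. Tools such as `pyperf compare_to` label differences as significant or not; the sketch below is a simplified stand-in (Welch's t statistic with a fixed threshold of 2, which only approximates a 95% two-sided test for large samples), not pyperf's code. Sample values are made up.

```python
import math
import statistics
from typing import Sequence


def welch_t(a: Sequence[float], b: Sequence[float]) -> float:
    """Welch's t statistic for the difference between the means of a and b."""
    se = math.sqrt(statistics.variance(a) / len(a) + statistics.variance(b) / len(b))
    diff = statistics.mean(a) - statistics.mean(b)
    if se == 0.0:
        # No noise at all: any difference in means counts as significant.
        return math.copysign(math.inf, diff) if diff else 0.0
    return diff / se


def looks_significant(a: Sequence[float], b: Sequence[float], threshold: float = 2.0) -> bool:
    """Rough check: |t| above ~2 approximates a 95% two-sided test for large samples."""
    return abs(welch_t(a, b)) > threshold


before = [100.0, 101.0, 99.0, 100.0, 100.0]  # hypothetical timings (ms)
after = [110.0, 111.0, 109.0, 110.0, 110.0]
print(looks_significant(before, after))
```

With noisier samples, the same 10 ms difference would give a smaller |t| and could be reported as not significant, which is why unstable benchmarks hide regressions.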

dulwich_log:

pidigits:

sqlite_synth:

Apart from something around April 2016, the tornado_http result is stable:

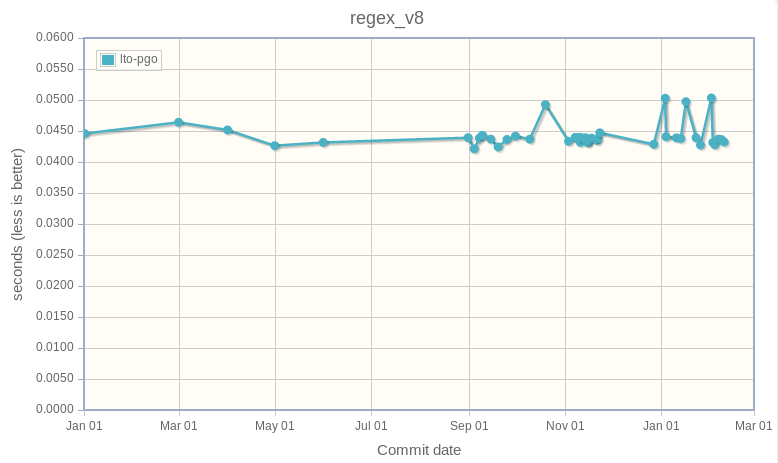

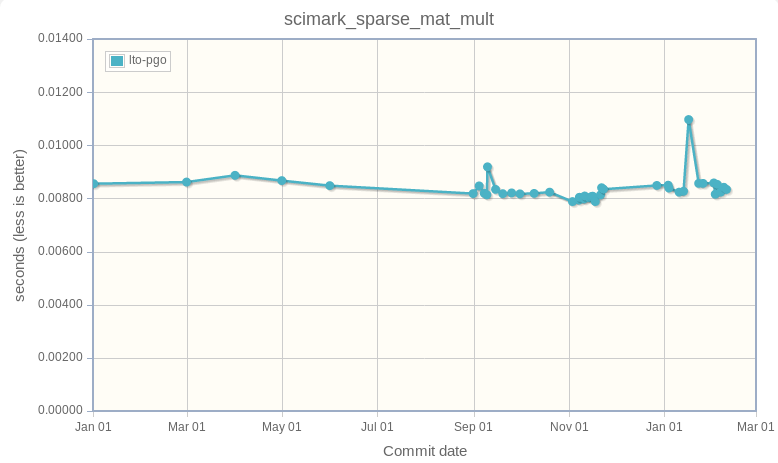

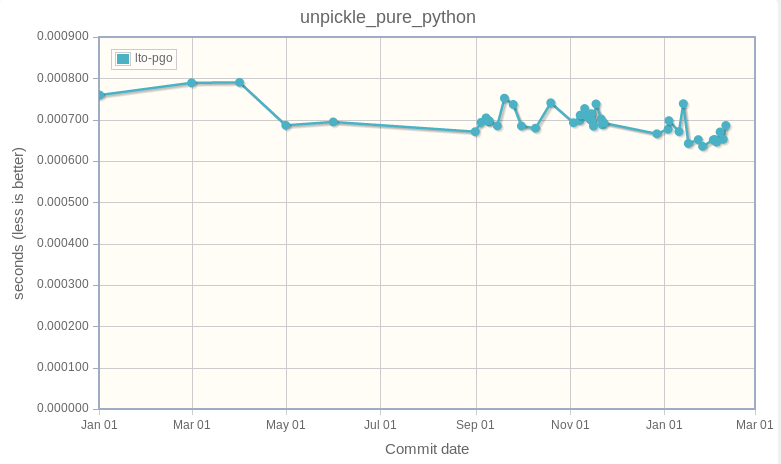

Unstable benchmarks

After months of effort to make everything stable, some benchmarks are still unstable, even if temporary spikes are smaller than before. See "Analysis of a Python performance issue" for the size of earlier temporary performance spikes.
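Temporary spikes like these can be flagged automatically. A minimal sketch, assuming a hypothetical rule of "more than 10% slower than the median of the series"; a real detector would also need to tell a one-off spike from a lasting regression, which this simple global median does not do.

```python
import statistics
from typing import List, Sequence


def find_spikes(values: Sequence[float], tolerance: float = 0.10) -> List[int]:
    """Indexes of results more than `tolerance` (default 10%) above the median."""
    median = statistics.median(values)
    return [i for i, v in enumerate(values) if v > median * (1 + tolerance)]


# Hypothetical benchmark results (ms) over successive revisions.
history = [100.0, 101.0, 99.0, 130.0, 100.0, 100.0]
print(find_spikes(history))
```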

regex_v8:

scimark_sparse_mat_mult:

unpickle_pure_python:

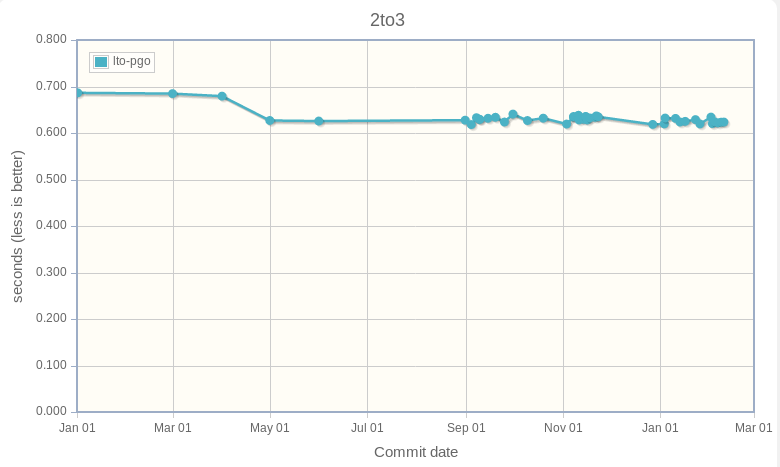

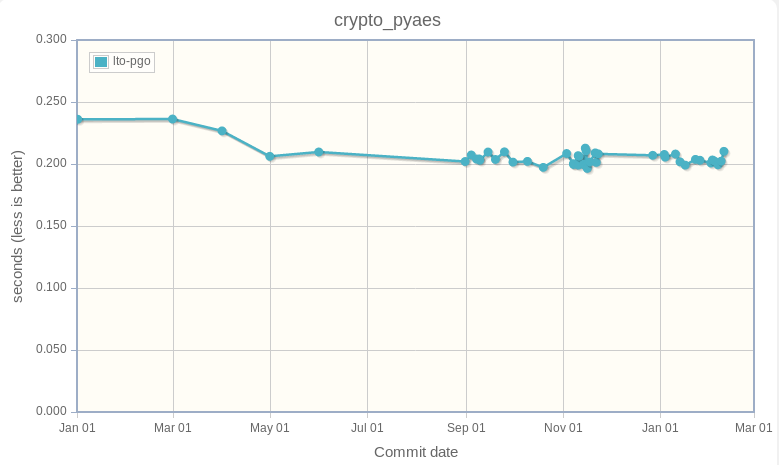

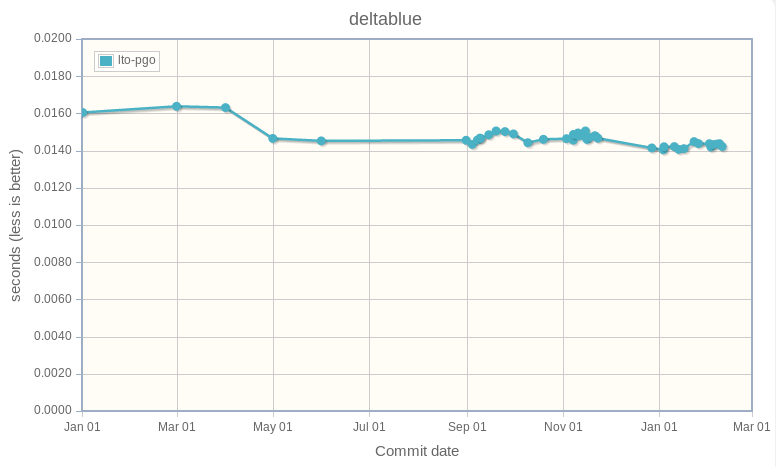

Boring results

There is nothing interesting to say about the following benchmark results.

2to3:

crypto_pyaes:

deltablue:

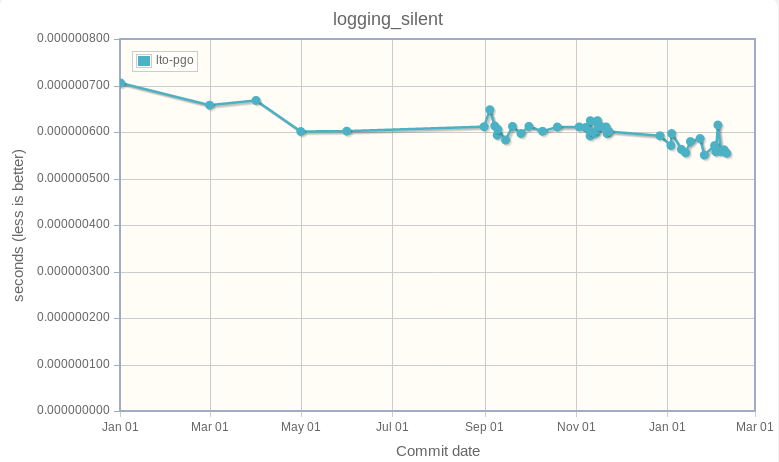

logging_silent:

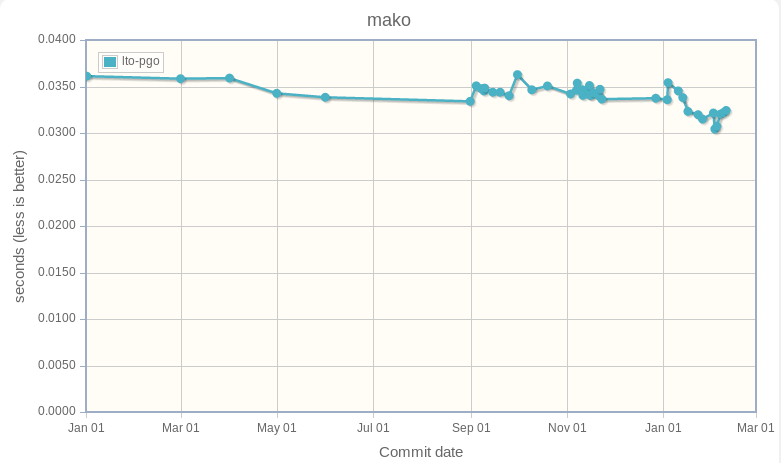

mako:

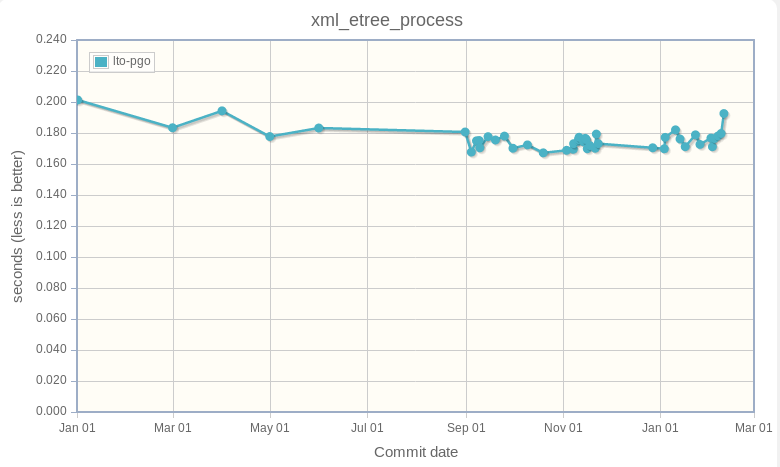

xml_etree_process:

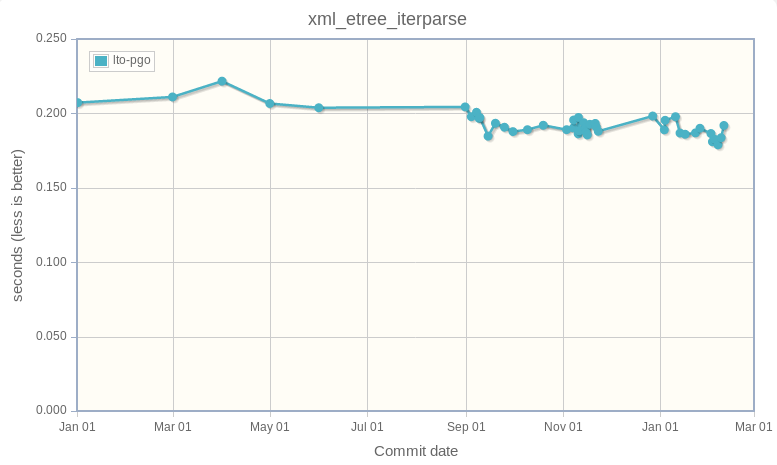

xml_etree_iterparse: